Environment Perception in Autonomous Driving

Interpreting high-dimensional feature spaces of convolutional neural networks

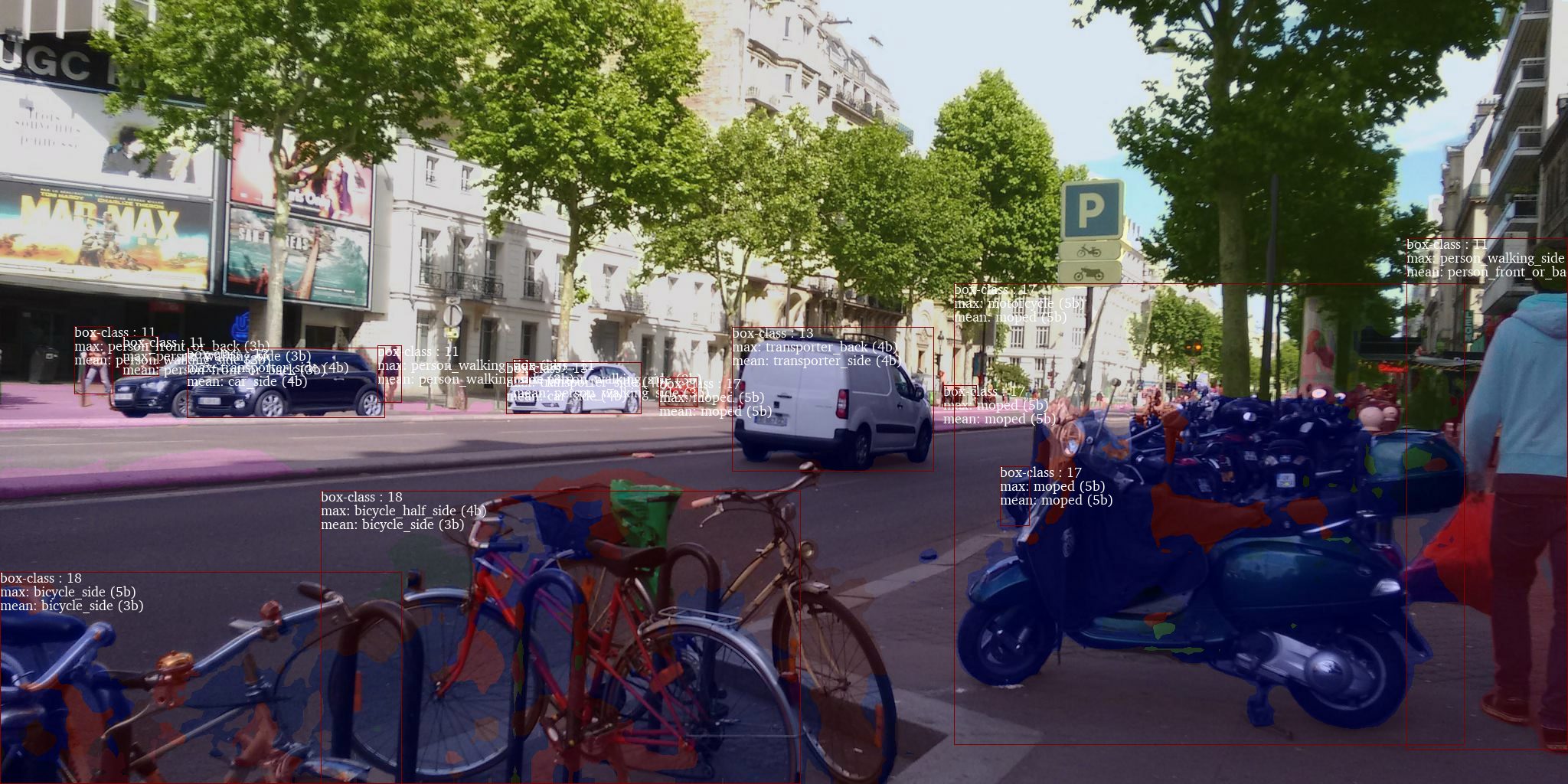

As part of a research group at Mercedes-Benz (led by Uwe Franke) that works on image understanding and environment perception for autonomous driving, I trained deep convolutional neural networks for semantic image segmentation. In particular, using the Cityscapes dataset, I developed algorithms for interpreting and visualizing high-dimensional feature spaces. These algorithms allow the system to predict subclasses of objects even though it was never trained on them.

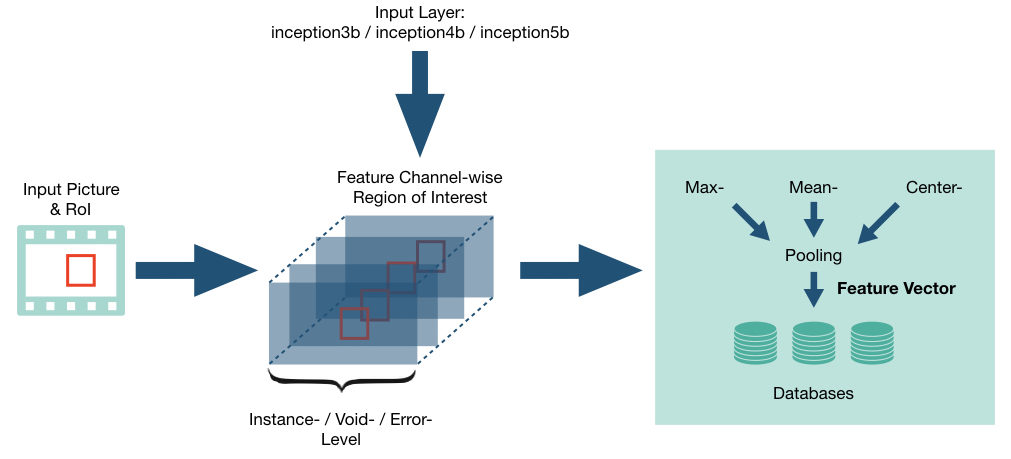

Overview: simplified view of an extraction framework that builds databases for similarity searches over pooled feature vectors.
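To make the idea of pooled feature vectors and a similarity database more concrete, here is a minimal sketch in plain Python. It is not the framework from the project. It assumes that each object is described by a feature map (height x width x channels) plus a binary mask, that pooling is a masked average, and that similarity is the cosine of L2-normalized vectors. All function names, labels, and numbers are hypothetical toy values.

```python
import math

def masked_average_pool(feature_map, mask):
    """Average the channel vectors of all pixels where mask is True.

    feature_map: nested lists of shape H x W x C
    mask:        nested lists of shape H x W (booleans)
    """
    channels = len(feature_map[0][0])
    total = [0.0] * channels
    count = 0
    for feature_row, mask_row in zip(feature_map, mask):
        for vector, inside in zip(feature_row, mask_row):
            if inside:
                count += 1
                for c, value in enumerate(vector):
                    total[c] += value
    if count == 0:
        raise ValueError("mask selects no pixels")
    return [t / count for t in total]

def l2_normalize(vector):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm > 0 else list(vector)

def build_database(samples):
    """Turn (label, feature_map, mask) triples into (label, unit vector) entries."""
    return [(label, l2_normalize(masked_average_pool(fm, m)))
            for label, fm, m in samples]

def most_similar(database, query, k=1):
    """Return the k entries with the highest cosine similarity to query."""
    q = l2_normalize(query)
    scored = [(label, sum(a * b for a, b in zip(vec, q)))
              for label, vec in database]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]

# Toy 2 x 2 feature map with 2 channels (assumed values, not real activations).
feature_map = [[[1.0, 0.0], [3.0, 0.0]],
               [[0.0, 5.0], [0.0, 7.0]]]
mask_top = [[True, True], [False, False]]
mask_bottom = [[False, False], [True, True]]

database = build_database([
    ("object_a", feature_map, mask_top),     # hypothetical label
    ("object_b", feature_map, mask_bottom),  # hypothetical label
])
best = most_similar(database, [0.9, 0.2], k=1)
print(best[0][0])
```

Normalizing at insertion time keeps the query step down to a dot product, which is a common choice when the database is searched far more often than it is extended.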

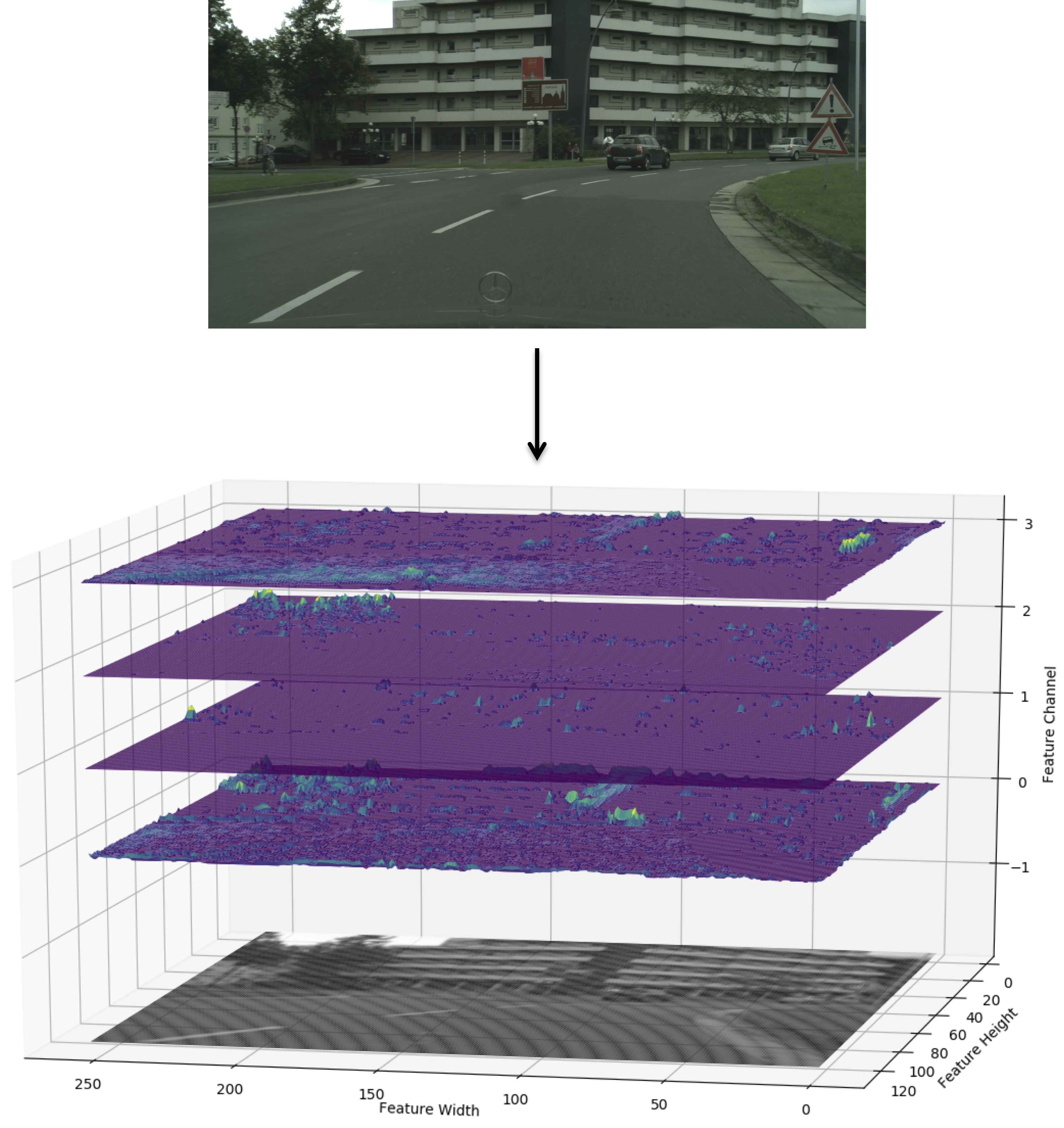

Example: Visualization of activations of different convolutional neural network layers.

Example: Predictions of subclasses by interpreting feature spaces from different network layers.
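One simple way to picture subclass prediction from several layers is a nearest-neighbour lookup per layer followed by a majority vote. The sketch below is only an illustration under that assumption, not the method used in the project. The layer names (`conv3`, `conv4`, `conv5`), the subclass labels (`van`, `taxi`), and all vectors are hypothetical.

```python
import math
from collections import Counter

def nearest_label(database, query):
    """Label of the stored vector with the smallest Euclidean distance to query."""
    return min(database, key=lambda entry: math.dist(entry[1], query))[0]

def predict_subclass(layer_databases, layer_queries):
    """Majority vote over the nearest-neighbour label found in each layer."""
    votes = Counter(
        nearest_label(layer_databases[layer], layer_queries[layer])
        for layer in layer_queries
    )
    return votes.most_common(1)[0][0]

# Hypothetical reference vectors per layer, labelled with subclasses that the
# segmentation network itself was not trained to distinguish.
layer_databases = {
    "conv3": [("van", [0.9, 0.1]), ("taxi", [0.1, 0.9])],
    "conv4": [("van", [0.8, 0.3]), ("taxi", [0.2, 0.7])],
    "conv5": [("van", [0.6, 0.4]), ("taxi", [0.3, 0.8])],
}

# Pooled feature vectors of one unseen object, one per layer (toy values).
layer_queries = {
    "conv3": [0.8, 0.2],
    "conv4": [0.7, 0.3],
    "conv5": [0.2, 0.8],
}

prediction = predict_subclass(layer_databases, layer_queries)
print(prediction)
```

Using an odd number of layers avoids ties in this toy setup. A weighted vote or a distance threshold for rejecting uncertain matches would be natural refinements.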